The following is a refresh of a previous post from 2016.

You’ve just made the decision to adopt one of the Jazz solutions from IBM. Of course, being the conscientious and proactive IT professional that you are, you want to ensure that you deploy the solution to an environment that is performant and scalable. Undoubtedly you begin scouring the IBM Knowledge Center and the latest System Requirements. You’ll find some help and guidance on Deployment and installation planning and even a reference to advanced information on the Deployment wiki. Unlike the incongruous electric vehicle charging station in a no-parking zone, you want clear, consistent guidance, but you come away scratching your head, still unsure of how many servers you need and how big they should be.

This is a question I am asked often, especially lately. I’ve been advising customers on it for several years now and thought it would be good to start capturing some of my thoughts. As much as we’d like it to be a cut-and-dried process, it’s not. This is an art, not a science.

My aim here is to capture my thought process and some of the questions I ask and references I use to arrive at a recommendation. Additionally, I’ll add in some useful tips and best practices.

I find that the topology and sizing recommendations are similar regardless of whether the server is physical or virtual, on-prem or in the cloud, managed or otherwise. Those choices certainly affect other aspects of your deployment architecture, but generally not the number of servers in your deployment or their size. One exception is that managed cloud environments often start below the recommended target, since the teams managing them know how to monitor the environment, watch for indicators that more resources are needed, and respond quickly to increasing demand.

From the outset, let me say that no matter what recommendation I or one of my colleagues gives you, it’s only a point-in-time recommendation based on the limited information available, and its fidelity will increase over time. You must monitor your Jazz solution environment. That way you can watch for trends that show when a given server is at capacity and needs to scale by increasing system resources, redistributing applications in the topology and/or adding a new server. See Deployment Monitoring for some initial guidance. Since 6.0.3, we have added capabilities to monitor Jazz applications using JMX MBeans. Enterprise monitoring is a critical practice to include in your deployment strategy.

Before we even talk about how many servers you need and how big they should be, the other standard recommendation is to ensure you have a strategy for keeping the Public URI stable, which maximizes your flexibility to change your topology later. We’ve also spent a lot of time deriving standard topologies based on our knowledge of the solution, functional and performance testing, and our experience with customers. Those topologies vary in the number of servers they include. The departmental topology is useful for a small proof of concept or a sandbox environment in which to develop your processes and procedures and any required configuration and customization. For most production environments, a distributed enterprise topology is needed.

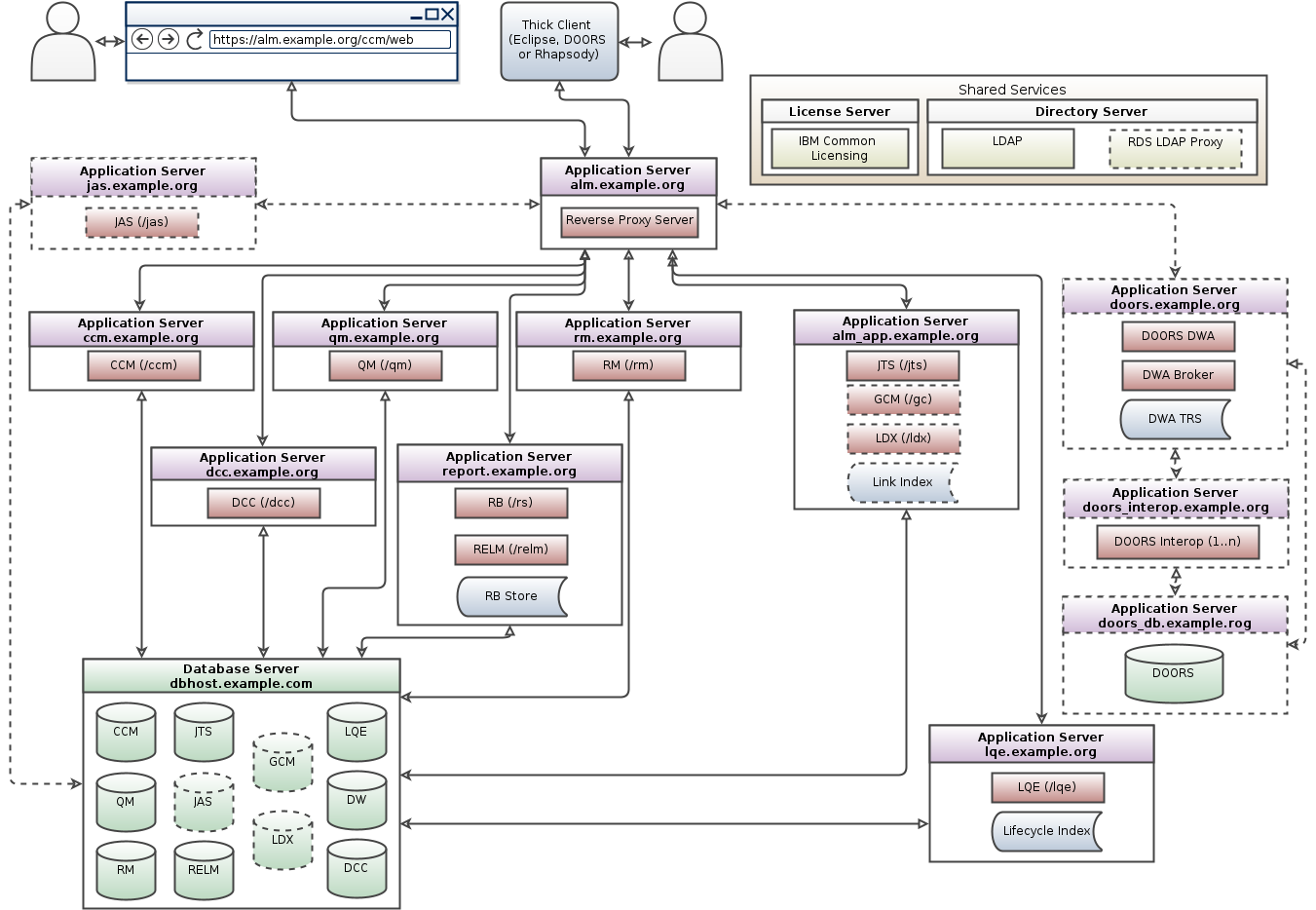

The tricky part is that the enterprise topology specifies a minimum of 8 servers to host just the Jazz-based applications, not counting the Reverse Proxy Server, Database Server, License Server, Directory Server or any of the servers required for non-Jazz applications (IBM or 3rd party). For ‘large’ deployments of 1000 users or more, that seems reasonable. What about smaller deployments of 100, 200 or 300 users? Clearly 8+ servers is overkill and will deter you from standing up an environment at all. This is where some of the ‘art’ comes in. More often than not, I find myself recommending a topology that is somewhere between the departmental and enterprise topologies. In some cases, a federated topology is needed: the deployment has separate, independent Jazz instances but must still provide a common view for reporting and/or global configurations, for example to support a product line strategy. The need for separate instances could be driven by isolation, sizing, reduced exposure to failures, organizational boundaries, a merger or acquisition, customer/supplier separation, etc.

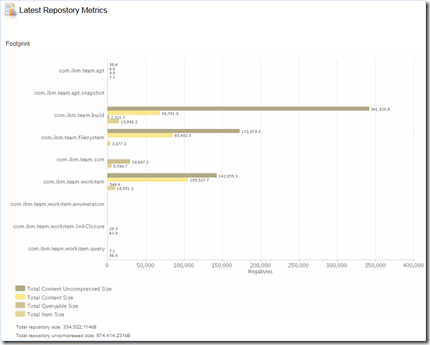

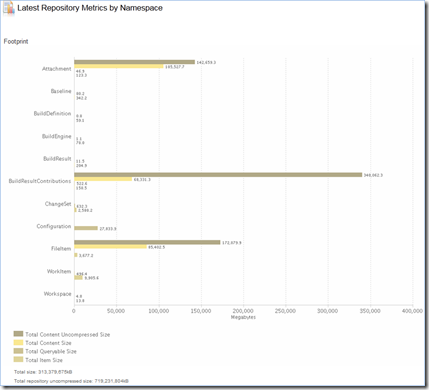

The other part of the ‘art’ is recommending the sizing for a given server. Here I make extensive use of all the performance testing that has been done, including the following.

- CLM Sizing Strategy for the 6.0 release

- Performance test results for Rational DOORS Next Generation 6.0.6 ifix3 with Components

- Rational DOORS Next Generation: Organizing requirements for best performance

- Collaborative Lifecycle Management performance report: Rational Quality Manager 6.0.6.1

- CLM 6.0 Performance Report for Global Configuration and link indexing services

- Rational DOORS 9 to Rational DOORS Next Generation migration sizing guide

- Deployment Topologies for Jazz Reporting Service

- Performance summary and guidance for the Data Collection Component in Rational Reporting for Development Intelligence

- Best Practices for Configuring LQE For Performance and Scalability

- Performance and Data Scalability of Rational Rhapsody Design Manager

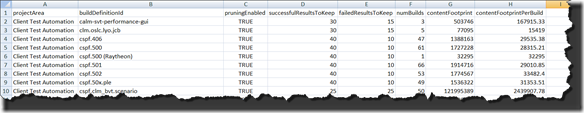

The CLM Sizing Strategy provides a comfortable range of concurrent users that a given Jazz application can support on a server of a given size for a given workload. If your number of users is higher or lower, your server bigger or smaller, or your workload more or less demanding than in the tests, expect the supported range, or the sizing you need, to differ. In other words, adjust your sizing or expected range of users up or down based on how closely you match the test environment and workload used to produce the CLM Sizing Strategy. Concurrent use comes not only from Jazz users working directly in the applications but also from 3rd party integrations, build systems and scripts. All such usage drives load, so be sure to factor it into the sizing. Other factors, such as isolating one group of users and projects from another, may motivate you to have separate servers even if a single server could support all those users.
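One way to apply this judgment is to express every source of load in terms of the interactive user the sizing tests modeled, then compare the total with the tested range. The sketch below assumes per-source weights (how much load one build engine or integration client generates relative to one interactive user); those weights, the counts and the source names are hypothetical placeholders you would need to estimate from your own measurements, not values from the CLM Sizing Strategy. The 400-600 range is the work-item-only CCM range cited in this post.

```python
from dataclasses import dataclass


@dataclass
class LoadSource:
    name: str
    concurrent_clients: int
    # Hypothetical weight: load from one client of this source relative to
    # one interactive user in the sizing test workload (1.0 = same load).
    weight: float


def effective_concurrent_users(sources):
    """Express all load sources as interactive-user equivalents and sum them."""
    return sum(s.concurrent_clients * s.weight for s in sources)


def compare_to_range(effective, low, high):
    """Place the effective load relative to a tested comfortable range."""
    if effective < low:
        return "below range"
    if effective > high:
        return "above range"
    return "within range"


sources = [
    LoadSource("interactive users", 350, 1.0),
    LoadSource("3rd party integration sync", 10, 5.0),
    LoadSource("build engines", 20, 3.0),
]
load = effective_concurrent_users(sources)
print(load, compare_to_range(load, 400, 600))  # 460.0 within range
```

Note that 350 interactive users alone would fall below the range; it is the automated load that moves this hypothetical server into it.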

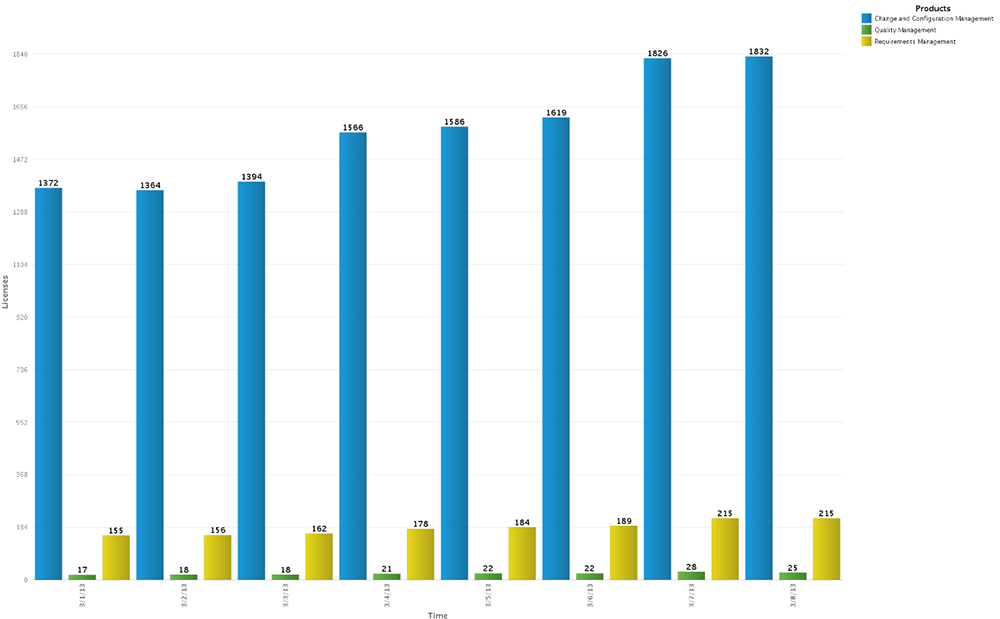

If your expected number of concurrent users is beyond the range for a given application, you’ll likely need an additional application server of that type. For example, the CLM Sizing Strategy indicates a comfortable range of 400-600 concurrent users on a CCM (Engineering Workflow Management) server used only for work items (tracking and planning functions). If you expect 900 concurrent users, it’s reasonable to assume you’ll need two CCM servers. Scaling a Jazz application to support higher load means adding another server, which the Jazz architecture supports through multi-server or clustered topology patterns. Be aware, though, that there are some behavioral differences and limitations when working with the multi-server (not clustered) pattern. See Planning for multiple Jazz application server instances and its related topics to understand the considerations to address up front as you define your topology and supporting usage models. As of 6.0.6.1, application clustering is only available for the CCM application.
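The arithmetic behind the 900-user example is a simple ceiling division. This sketch shows how the answer depends on which point in the 400-600 range you plan against: the top or middle of the range gives two servers, while planning conservatively at the bottom gives three. The function name is illustrative, and the calculation says nothing about how users or projects are split across the servers, which is where the multi-server considerations above come in.

```python
import math


def servers_needed(expected_concurrent, per_server_capacity):
    """Servers of one application type needed so that no server carries
    more than `per_server_capacity` concurrent users (ceiling division)."""
    if per_server_capacity <= 0:
        raise ValueError("per_server_capacity must be positive")
    return math.ceil(expected_concurrent / per_server_capacity)


# CCM used only for work items: comfortable range of 400-600 concurrent users.
for capacity in (600, 500, 400):
    print(capacity, servers_needed(900, capacity))
# 600 2
# 500 2
# 400 3
```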

This post doesn’t address other servers likely needed in your topology, such as a Reverse Proxy, Jazz Authorization Server (which can be clustered), Content Caching Proxy and the License Key Server Administration and Reporting tool. Be sure to read up on those so you understand when and how they should be incorporated into your topology. Additionally, many of the performance and sizing references I listed earlier include recommendations for various JVM and application settings. Review those, and others included in the complete set of Performance Datasheets and Sizing Guidelines. It is critical not only to size the servers correctly but also to tune the JVM and applications properly.

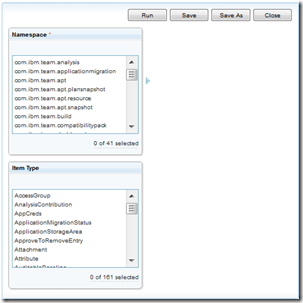

To get to the crux of the primary question, how many servers and how big, I ask a number of questions. Here’s a quick checklist.

- What Jazz applications are you deploying?

- What other IBM or 3rd party tools are you integrating with your Jazz applications?

- How many total and concurrent users, by role and geography, do you expect initially, and how many are you ultimately targeting? What is the projected adoption rate?

- What is the average network latency from each remote location to the servers?

- How much data (number of artifacts by domain) are you migrating into the environment? What is the projected growth rate?

- If adopting IBM Engineering Workflow Management, which capabilities will you be using (tracking and planning, SCM, build)?

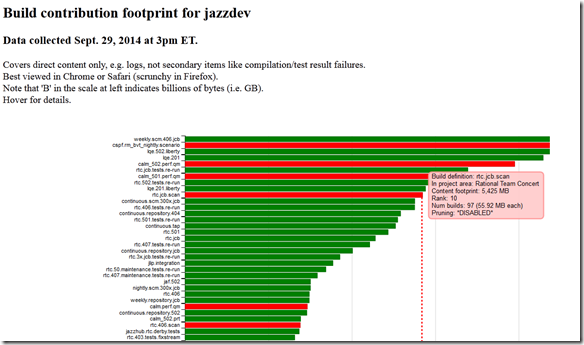

- What is your build strategy (frequency, volume)?

- Do you need hard boundaries between groups of users (e.g., organizational or customer/supplier) such that those groups should be placed on distinct servers?

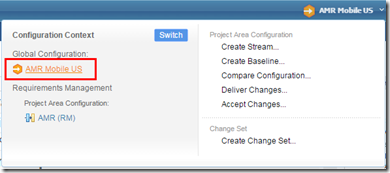

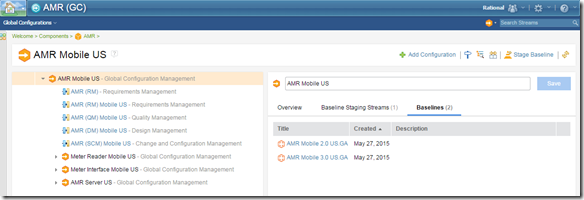

- Do you anticipate adopting the global or local configuration management capability?

- Do you make significant use of resource-intensive scenarios?

- What are your reporting needs (document generation vs. ad hoc reporting, frequency, volume/size)?

Most of these questions help me get a sense of which applications are needed and what could contribute to load on the servers. That tells me whether the sizing guidance from the performance reports mentioned earlier needs to be adjusted up or down, and how many servers to recommend. The answers also show whether any optimization strategies are needed.
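Because the answers drive the recommendation, it helps to keep them in a form that is easy to compare when you revisit them. A minimal sketch, assuming the answers are stored as a simple dictionary keyed by question (the keys and values shown are hypothetical), that reports which assumptions have changed between two snapshots:

```python
def changed_assumptions(baseline, current):
    """Return {question: (old_answer, new_answer)} for every answer that
    differs between two snapshots, including questions present in only one."""
    questions = baseline.keys() | current.keys()
    return {
        q: (baseline.get(q), current.get(q))
        for q in sorted(questions)
        if baseline.get(q) != current.get(q)
    }


# Hypothetical snapshots of the checklist answers, taken months apart.
initial = {
    "applications": ("CCM", "QM", "RM"),
    "peak_concurrent_users": 300,
    "ccm_capabilities": ("tracking and planning",),
}
today = {
    "applications": ("CCM", "QM", "RM"),
    "peak_concurrent_users": 450,
    "ccm_capabilities": ("tracking and planning", "SCM"),
    "global_configuration": True,
}
for question, (old, new) in changed_assumptions(initial, today).items():
    print(f"{question}: {old} -> {new}")
```

Any change it reports, such as SCM being added to the CCM capabilities or global configuration being adopted, is a prompt to re-check the sizing.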

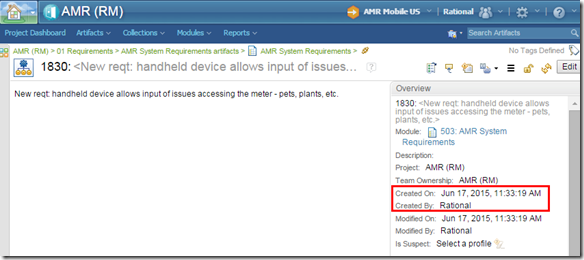

As you answer these questions, document your answers and revisit them periodically to determine whether the original assumptions that led to a given recommended topology and sizing have changed and now call for a change in the deployment architecture. Validate them with a cohesive monitoring strategy that shows whether usage is growing slower or faster than expected and detects when a server is nearing capacity. Another good practice is to create a suite of tests that establishes a baseline of response times for common day-to-day scenarios from each primary location. As you make changes in the environment (e.g., server hardware, memory or cores, software versions, network optimizations), rerun the tests to check the effect of the changes. The tests can be as simple as manually running a scenario while a tool monitors and measures network activity (e.g., Firebug). Alternatively, you can automate the tests using a performance testing tool. Our performance testing team has begun to capture their practices and strategies in a series of articles, starting with Creating a performance simulation for Rational Team Concert using Rational Performance Tester.
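A baseline comparison can be automated with very little code. The sketch below assumes each scenario is wrapped in a Python callable (for example, one that issues the same requests a user’s browser would); those wrappers, the scenario names and the 10% tolerance are hypothetical. It records the median time of several runs per scenario and flags any scenario that has slowed beyond the tolerance relative to the baseline.

```python
import statistics
import time


def time_scenario(scenario, runs=5):
    """Run a scenario callable `runs` times and return the median duration
    in seconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        scenario()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def compare_to_baseline(baseline, current, tolerance=0.10):
    """Return {scenario: (baseline_s, current_s)} for scenarios whose median
    time grew by more than `tolerance` (0.10 = 10%) over the baseline."""
    regressions = {}
    for name, base in baseline.items():
        now = current.get(name)
        if now is not None and now > base * (1 + tolerance):
            regressions[name] = (base, now)
    return regressions


# Hypothetical medians (seconds) measured from one location before and
# after an environment change.
before = {"open work item": 0.80, "run saved query": 1.50}
after = {"open work item": 0.85, "run saved query": 1.90}
print(compare_to_baseline(before, after))  # {'run saved query': (1.5, 1.9)}
```

The median is used rather than the mean so that a single slow run caused by a transient network hiccup does not dominate the result. Keep one baseline per primary location, since latency differs by site.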

In closing, the kind of guidance I’ve talked about often comes out of a larger discussion that looks at the technical deployment architecture from a more holistic perspective, taking into account several of the non-functional requirements for a deployment. This discussion typically takes the form of a Deployment Workshop and covers many of the deployment best practices captured on the Deployment wiki. These non-functional requirements can impact your topology and deployment strategy. Take advantage of the resources on the wiki or engage IBM to conduct one of these workshops.